Explorations in failure - trying to get a LLM to detect LLM generated content on HIVE

There has been some discussion about AI and LLM generated content on HIVE. The overall consensus appears to be that LLM and AI content is bad, but I have yet to see any conclusive, widespread analysis of the recent content on HIVE.

An unironic AI / LLM generated image as a headline picture

There are websites like GPT-Zero that are able to "detect" if something is written using AI, using some sort of probability analysis. Tools like GPT-Zero are closed source, paid, and subscription only, and as one human, I am unlikely to reach the level of sophistication that their models use. Firstly, I'm not a linguist, and I am also not an expert sys admin, software engineer, or developer.

I have the ability to run several local LLMs on my machine, and figured I would experiment, using HIVE content to try to detect the likelihood of content on HIVE having been written by a LLM.

I am a single man with a casual interest in these things, and the ability to write some Python to hook into other software tools that I have access to.

I started by installing LM Studio, and downloaded a few different models. There is not much recent information published online about the best models for detection, particularly with the advent of many hundreds of models, fine tunes, and the sheer numbers of text generators that now exist on the Internet.

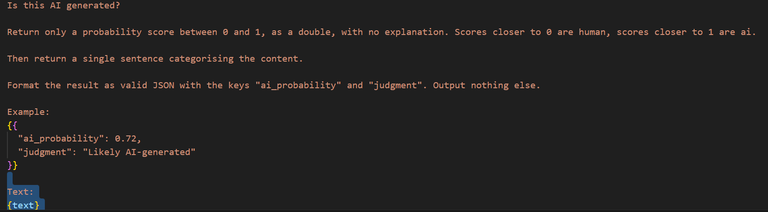

As a result, I experimented with a few models. I got a Python script to hook into LM Studio's local API (as in, a server running on my computer) - and fed it the prompt:

Given that the samples I fed this are the same used for my weekly analysis on post length, I have a file with something like 50,000 HIVE posts stored locally on my machine. I got the script to feed 100 randomly sampled examples into the LLM, and for it to give me a score.

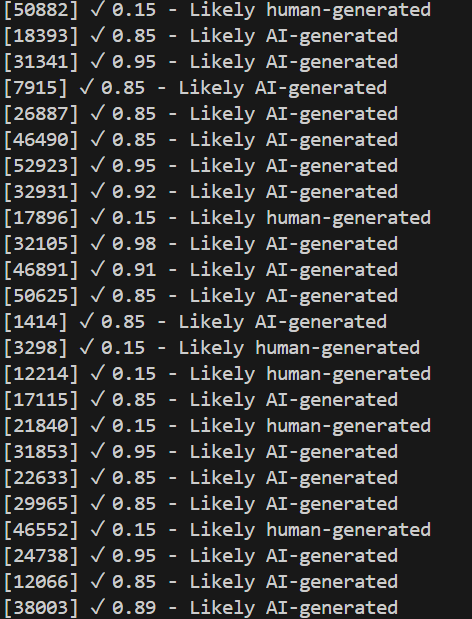

The console output was something like this:

This shows the row number, the probability and a judgement.

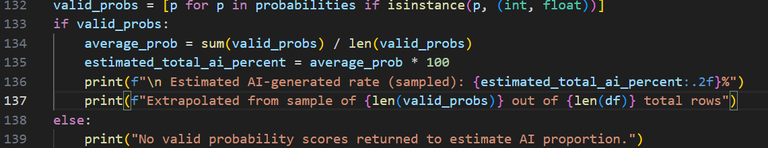

Once 100 results are completed, the script does some mathematics to normalise the 100 random samples to the whole data set.

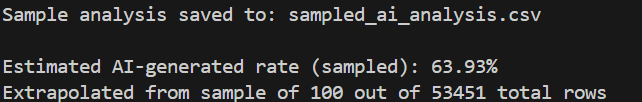

Based on the 100 samples that the script randomly selected:

I am fairly certain that human-written content makes up more than 63% of HIVE, but I could be wrong. The issue here is the volume of posts, the volume of text, and the computational resources available to assess this.
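To put rough numbers on that, here is a minimal sketch (not part of my actual script) of how much wiggle room a small sample leaves, and how long a full pass might take. The function names `summarise_sample` and `full_run_days` are made up for illustration, the margin uses a simple normal approximation, and the seconds-per-post figure is an assumption that will vary with model and hardware.

```python
import math


def summarise_sample(probabilities, z=1.96):
    """Return (mean, margin) for a list of 0-1 scores.

    Uses a normal approximation, so the margin is only a rough guide
    for a sample as small as 100.
    """
    # Ignore rows where the model returned nothing usable
    valid = [p for p in probabilities if isinstance(p, (int, float))]
    n = len(valid)
    if n < 2:
        raise ValueError("Need at least two valid scores.")
    mean = sum(valid) / n
    # Sample variance (n - 1 in the denominator)
    variance = sum((p - mean) ** 2 for p in valid) / (n - 1)
    margin = z * math.sqrt(variance / n)
    return mean, margin


def full_run_days(total_posts, seconds_per_post):
    """Days needed to score every post one at a time."""
    return total_posts * seconds_per_post / 86400


if __name__ == "__main__":
    # Made-up scores, purely to show the shape of the output
    mean, margin = summarise_sample([0.1, 0.8, 0.3, None, 0.6, 0.2])
    print(f"Estimated AI rate: {mean:.0%} plus or minus {margin:.0%}")
    # Assumed: 50,000 posts at roughly 35 seconds each
    print(f"Full pass: about {full_run_days(50_000, 35):.0f} days")
```

At an assumed 35 seconds per post, 50,000 posts works out to roughly 20 days of continuous processing, which is why I only sampled.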

I then went and looked through the 100 randomly sampled rows, and there were some interesting findings:

- The AI categorised some human written content (albeit with low levels of mastery of the English language) as AI, instead of human

- The 100 samples contained many generated posts by various known bots, indicating that the data must first be cleaned further before analysis

- Posts in languages other than English end up in the sample, and it is unclear whether the LLM takes other languages into account when completing its probabilistic analysis

- It could all be a massive hallucinated result, not grounded in reality.
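The cleaning problems above (bot posts and non-English posts) could be handled before sampling. This is only a sketch under loud assumptions: the bot names in `KNOWN_BOTS` are placeholders, not real accounts, and `looks_english` is a crude stopword-ratio heuristic I am using for illustration, not a proper language detector.

```python
import re

# Placeholder account names, purely hypothetical
KNOWN_BOTS = {"example-bot", "another-bot"}

# A tiny set of very common English words
ENGLISH_STOPWORDS = {
    "the", "and", "of", "to", "a", "in", "is", "that",
    "it", "for", "with", "on", "this", "was", "are",
}


def looks_english(text, threshold=0.15):
    """Guess whether text is English from its share of common English words."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return False
    hits = sum(1 for word in words if word in ENGLISH_STOPWORDS)
    return hits / len(words) >= threshold


def clean_posts(posts):
    """Drop posts by known bots and posts that do not look English."""
    return [
        post for post in posts
        if post["author"] not in KNOWN_BOTS and looks_english(post["body"])
    ]


if __name__ == "__main__":
    sample = [
        {"author": "example-bot", "body": "This is the daily report of the votes."},
        {"author": "alice", "body": "This is a post that I wrote in the garden with my dog."},
        {"author": "bob", "body": "Hoy fui al mercado con mi familia y compramos frutas."},
    ]
    for post in clean_posts(sample):
        print(post["author"])
```

A heuristic this simple will misjudge short posts and mixed-language posts, so it would need checking by eye before trusting any numbers that come out the other end.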

There are also questions as to the ability of one LLM to detect output of another LLM.

So, I encourage people to use their eyes, their brains, their hands, their corporeal appendages, and their thoughts to examine content on HIVE closely, and ask "Does this seem to enter the uncanny valley of LLM output?"

And if you think that it does - call the author out on it. They may be using a translation service, or they may have tried to mask AI writing in their post.

If you call them out and they change their habits - GOOD. If you call them out and there is no response - then you have a choice to accept that content, or to do something about it.

People who care about their own integrity and the integrity of the platform itself will change their habits. If they don't - then, are they the sort of people we want on HIVE anyway?

Disclosure: some of the scripts used for this post (not shared at this time) were generated using LLM in the interest of bringing this analytical process to life far quicker than I could have using my own coding "skills" alone.

The full code, if anyone is interested:

    import json
    import re
    import time

    import pandas as pd
    import requests

    CSV_INPUT = "Concat.csv"
    CSV_OUTPUT = "sampled_ai_analysis.csv"
    TEXT_COLUMN = "body trim clean"
    AUTHOR_COLUMN = "author"
    LM_API_URL = "http://localhost:1234/v1/chat/completions"
    MODEL_NAME = "qwen/qwen3-8b"
    SAMPLE_SIZE = 100
    MAX_TEXT_LENGTH = 2000

    # Attempt to load the CSV with fallback encodings.
    # Note: ISO-8859-1 decodes any byte sequence, so it acts as the catch-all here.
    encodings = ['utf-8', 'ISO-8859-1', 'utf-16']
    for enc in encodings:
        try:
            df = pd.read_csv(CSV_INPUT, encoding=enc)
            break
        except UnicodeDecodeError:
            continue
    else:
        raise ValueError("Could not read the CSV file using standard encodings.")

    # Check required columns
    if TEXT_COLUMN not in df.columns or AUTHOR_COLUMN not in df.columns:
        raise ValueError(f"Required column(s) not found: '{TEXT_COLUMN}' and/or '{AUTHOR_COLUMN}'.")

    # Sample 100 random rows (fixed seed, so the same rows are picked on every run)
    sampled_df = df.sample(n=min(SAMPLE_SIZE, len(df)), random_state=42).copy()


    # Helper to extract the first flat JSON object from the model's reply
    def extract_json(text):
        match = re.search(r'\{.*?\}', text, re.DOTALL)
        if match:
            try:
                return json.loads(match.group())
            except json.JSONDecodeError:
                return None
        return None


    # Create the prompt
    def make_prompt(text):
        return f"""
    Is this AI generated?
    Return only a probability score between 0 and 1, as a double, with no explanation. Scores closer to 0 are human, scores closer to 1 are ai.
    Then return a single sentence categorising the content.
    Format the result as valid JSON with the keys "ai_probability" and "judgment". Output nothing else.

    Example:
    {{
    "ai_probability": 0.72,
    "judgment": "Likely AI-generated"
    }}

    Text:
    {text}
    """


    # Output containers
    probabilities = []
    judgments = []
    texts = []
    authors = []

    # Process each sampled row
    for idx, row in sampled_df.iterrows():
        text = str(row[TEXT_COLUMN])[:MAX_TEXT_LENGTH]
        author = row[AUTHOR_COLUMN]

        messages = [
            {"role": "system", "content": "Respond only with valid JSON, do not explain."},
            {"role": "user", "content": make_prompt(text)}
        ]
        payload = {
            "model": MODEL_NAME,
            "messages": messages,
            "temperature": 0.2,
            "max_tokens": 100
        }

        try:
            response = requests.post(LM_API_URL, json=payload, timeout=30)
            response.raise_for_status()
            content = response.json()["choices"][0]["message"]["content"]
            parsed = extract_json(content)
            if parsed and "ai_probability" in parsed and "judgment" in parsed:
                probability = parsed["ai_probability"]
                judgment = parsed["judgment"]
            else:
                probability = None
                judgment = "Could not parse"
            print(f"[{idx}] ✓ {probability if probability is not None else 'N/A'} - {judgment}")
        except Exception as e:
            probability = None
            judgment = f"Error: {e}"
            print(f"[{idx}] Error: {e}")

        # Record exactly one result per row, whether it succeeded or failed
        probabilities.append(probability)
        judgments.append(judgment)
        texts.append(text)
        authors.append(author)

        time.sleep(0.5)  # throttle

    # Create final DataFrame
    output_df = pd.DataFrame({
        "author": authors,
        "body": texts,
        "ai_probability": probabilities,
        "judgment": judgments
    })

    # Save the sample analysis
    output_df.to_csv(CSV_OUTPUT, index=False, encoding='utf-8')
    print(f"\nSample analysis saved to: {CSV_OUTPUT}")

    # Compute average AI probability across the sample
    valid_probs = [p for p in probabilities if isinstance(p, (int, float))]
    if valid_probs:
        average_prob = sum(valid_probs) / len(valid_probs)
        estimated_total_ai_percent = average_prob * 100
        print(f"\n Estimated AI-generated rate (sampled): {estimated_total_ai_percent:.2f}%")
        print(f"Extrapolated from sample of {len(valid_probs)} out of {len(df)} total rows")
    else:
        print("No valid probability scores returned to estimate AI proportion.")

This is one of the downsides of artificial intelligence: it kills creativity and encourages laziness. It would be nice if a platform like Hive had a built-in tool that would screen articles before accepting them for publication, although I agree that the analysis results of some detection tools may not be entirely accurate.

I don't think that chain level analysis and rejection of content would ever be possible or viable - plus, I would dislike the impost that additional hardware and emissions would place upon witness node operators.

We can sift through the endless oceans of trash, though - and make sure we keep our "beaches" as pretty as possible :)

I read that LLMs are ridiculously prone to using the em dash (—) in their work. I wonder how much content of hive has that 😀

I find it easy to recognise and always call it out, it's ridiculous how many use the accusation of you or hive in general of being backward as a defense. Cocks be that they are

I used to use the em dash all the time - a favourite of mine! I've had to curb its use lest people suspect I am not real.

Holy kak. I don't mind admitting that I genuinely didn't know what it was till I read about AI loving it! 🤣🤣

I didn't know that's what they were called either!!!!

They certainly are. They also like to say things like this - then say not only that, instead. Or something like that - using words like "Testament" and a whole bunch of other tells. AI also likes to work in triplets, but that's something that good speeches have always had, and guess - (oh fuck another em dash) it was probably trained on that sort of content.

It probably was. It's quite funny how it churns out such similar formatting, which I suppose just exposes its training, at least for the moment!

I don't think it will be long before being able to tell will be harder and harder

Thanks for this good post, @holoz0r - I love that someone more tech-minded than myself is exploring this, and that Hive might even in the future be capable of filtering AI content.... 🪷

It is incredibly disappointing to see how ubiquitously AI-generated text and imagery are being sooked up into the internet, and it does feel wrong that Hive in particular is showing up so many examples of it: it hurts my eyes and offends my senses. 🙏 😵💫

Humans are losing their ability to reason, to cognise and to create, by leaning into such tools that insert themselves between our sentience, and our hands and actions.

I try not to be a part of this Descent, by going further into feeling and expression, and deeper into wild Nature.... @vincentnijman and I often discuss how we can make our lives more analogue, less embroiled in the digital quagmire.

Keep grounded, everyone!

Your reply is upvoted by @topcomment; a manual curation service that rewards meaningful and engaging comments.

More Info - Support us! - Reports - Discord Channel

Yes! LLMs are removing that cognition and agency, the same way in which the doom scroll, doesn't actually give a person what they want, it merely gives them a poker machine of content, and when they "find" something, the payoff is the joy chemicals, and they think, Oh, maybe I'll get that again - instead of thinking about - what do I want to look at? What do I want to read?

Sure, I've discovered some interesting things myself while doomscrolling, that I would have never searched for in the first place - but those that rot away doing this could have much more fulfilling intellectual "diets" if they searched for fulfilment, instead of trying to stumble upon it.

Greetings @holoz0r ,

Thank you for addressing this subject.....what a thorough post...more than most of us could do....Thank you!

@asgarth may be quite interested....here's hoping he can tell us if something is in the works to address AI and LLM...here at Hive.

Oh yes... it's true....especially when one is used to commenting and such...we can discern the automated, assisted chat.

There is hope for Hive....I became sure of it...after the backlash toward one of the witness's AI Agent recently.

Kind Regards, Bleujay

Generally speaking I'm not part of the group that want to completely remove LLM content from Hive.

I see that as a very uphill battle that will get more difficult by the day.

From my point of view it is better to educate both creators and curators so they use the tools at their disposal in a way that will benefit the community. Anyway, let me tag @jarvie here as he may have some additional feedback to share.

Thank you for your candid and kind response @asgarth ....appreciate it very much.

Education is definitely one of the important elements of using any tool. Some people simply don't read, they just copy paste the response the LLM outputs, its further prompting and all - without any respect or care for their original intention.

When we lose the human intent, and there's no "editorial" , I think that's when things get sad for those of us who do value that intentionality of content creation.

OK here's my additional feedback for him and anyone else...

I think that Hive would benefit from higher and higher quality content. What if we said "Hive: The blockchain where the most interesting content lives" I think quality of content benefits Hive more than just a lot of effort from lots of users. Let's get a ton of people coming to all these interfaces because they're so interested in the content.

I think we probably could be benefited more as a blockchain if we focus more on getting really good content here. That was hard years ago to attract the best content creators and writers. We hoped that "true ownership" alone would do the trick or that the reward pool would be enough but $50-100 wasn't quite enough for those elite creators. Now the world has changed since then...

It's understandable where we are: We have a lot of users who aren't trained professional writers and many times write in languages that are not their own and try to make very personal content to make sure people know it's original, because that's valued more than level of content. If you're close or invested in that person it's great but if you don't have a vested interest in that person or their vote won't return back to you beneficially it's not... so for every other reader there isn't a lot of interest when it's not high quality content in their book. Medium and substack are focused heavily on being a place where you get top writers writing stuff people want to read and follow along a certain writer/thinker.

We simply haven't attracted the world's best or most interesting writers so we need to find something else that will work for us, We have smart and resourceful people that will be consistent especially if they see a pattern that works and they're technically inclined for the most part. We should be encouraging users to use the tools that will make their content better and better. Good long form really interesting content.

I personally want more really really good, thought provoking and dynamic content to read (on topics i like)... there does happen to be tools that can help create really really good content. But also it can create really boring or predictable content by the lazy or unimaginative. (it's just a tool)

And we won't have to worry much at all about copyright issues if people learn how to do things right. Think about it: consistent really top notch and interesting content if they learn how to harness the power, if we hold tons of content that is really top notch we become a place where lots of people around the world come to read and learn.

Everyone pick subjects they love and have a headstart in understanding and they get better and smarter on those subjects and push out the best content on those things.

Just sayin...

... we can still keep the personal interaction stuff as many people may not want to put in the effort to do a lot of work on really well crafted documents or just want to have a place to hang out sharing with friends. That doesn't have to go. Connections are important for people as well.

Great reply! I have one point of contention though - if there's high level content that I am really interested in, I am going to want to become "social" with that person, because I am going to learn a lot of stuff from them.

I made a post on a photographer's content here, a few weeks ago. They've since posted several times - I asked a question about their technical process that I would've loved to learn more about.

They didn't reply. But that, I suppose, is the Internet.

I am now far less likely to click through to that person's content, because I would've loved that answer. I would have loved learning more about their discovery of their style and process, but I didn't.

We have to ask people about their motivations for being here as well. For some, it is easy to articulate and be honest about that. For others, Hive is just a means to an end.

We'll never stop people wanting to use LLM content - the issue I have is the stuff that is absolute crap, obviously generated, and adds absolutely no value. We already have humans doing a lot of that, we don't need LLMs to do it more efficiently.

eg: The posts that are a person rambling about what a 0.00000007% move in the btc price has to do with the sovereignty of cows in the inner mid-west of the US because some farmer has a BTC mining rig in their back barn, and winter is coming, therefore, they'll gather 'round it for warmth ... and -

LLMs are just tools. People can use them responsibly, or they can circle the drain of their own creations, as people who are "smart enough" will be able to poke holes in the content, or the argument, and know when to not engage.

Having said that, in "good hands" LLMs have the potential to be devastating to people who don't choose to adapt to the rapidly (and incredibly rapidly increasing) tide of technological "innovation" that we will witness over the next few years.

Heck, there may even be an anti LLM renaissance, who knows?!

Cool. Which models can you run locally?

Do you have a Mac with tons of ram or what?

I ran this on my RTX 4090, so stuff that fits within 24GB VRAM, but that does severely limit the types of models that you can run, and the token size you can feed.

You can run a fair few. They range from "yeah, that's neat" to "please, stop giving me your word salad". Commercial options and large models probably remain inaccessible unless you're using a Mac, as you suggest, with tons of fast, integrated memory.

LM Studio (it's available on Mac, Linux and Windows!) was pretty performant under Linux and Windows where I tested it. My Macbook only has 24GB of RAM (and it's a bog standard M2 Air, otherwise) and is incredibly slow compared to the 4090 for any AI workloads - but I think the newer chips and more RAM are a lot more performant.

LM studio also tells you within its interface what can be offloaded entirely into memory, to avoid huge amounts of swap, and uselessly small token / context.

Awesome thanks 👍

At some point I think we'll have to get used to consuming mostly AI generated content (and liking it!) since it'll be so much more efficient to generate it than to have content created by humans. So long as there's value in this kind of content, there shouldn't be a big issue with AI created content, should it? From my short time on this platform, however, I haven't yet understood the relationship between a content quality and number of upvotes and rewards. There's probably some auto voting going on or something

Oh there absolutely is auto voting going on, and that saddens me. I have done analysis on post length as an indicator of quality in the past, and that doesn't seem to have a correlation.

https://peakd.com/hive-133987/@holoz0r/hive-post-rewards-and-word-counts-22-jul-29-jul

From my few days here so far it seems there's fairly little manual voting going on. I suppose that's because votes are somewhat of a limited resource as far as I can tell. But it would be cool if people just treated votes like likes - "I like it so I upvote". I guess because there's a financial element to it people become very strategic about their votes

I think because a lot of people automate - this makes their manual votes rarer.

For manual votes to work, people need to actually open their eyes and engage with the content!

Very interesting experiment. I wonder what kind of computer you use to process this data. And many more questions though, but you have already summed them up yourself.

I wonder if systems will be able to detect AI usage in the near future since it will get better and better in mimicking natural language.

But it is surely good that this is on the agenda.

Do you have any further plans with this 'project' of yours?

I used my desktop computer!

I installed LM Studio, downloaded a few models that would fit in my RTX4090 VRAM, then did some scripting (with the ironic) help of a LLM to build and test the code before I refined it a little bit.

I don't think I have plans to do this for "other" stuff, given it took about 30-40 seconds to process per post, even batching it from a csv into LM studio. The 4090 is no computational slouch, but the limit of how many tokens (words) I can feed it locally gets in the way of probably doing good detection on longer posts.

Particularly those in multiple languages, using translation services possibly hitting false positives, but I think in my case, the LLM was just throwing darts at the wall and hoping (can a LLM hope?) that no real due diligence or interrogation of the output occurs.

That, I think is the real danger of LLM - not checking the output, not scrutinising the work, and blindly making decisions.

That it is. I'm involved in the AI usage policies at our company and checking the output is the nr1 rule.

I used to advise those people too at my old job, not the only thing on the pile of guidance!

My favourite was don't use AI just to use AI. :D

That's a good one too!

Call me realist, pessimist or a cynic, but AI is here and it's not only not going away, but it will escape detection eventually. The only way forward is acceptance and adaptation.

Of course, not all adaptation is equal - people who are so lazy that they have AI do everything for them, including leaving comments is just idiotic. It's low-effort, inauthentic, mundane garbage that isn't passing the smell test, not even by half-literate such as myself.

The other way, is to use AI as an 'editor' for polishing and translating ideas and text. If one does it right then I see it as an 'acceptable' common ground - where effort is still applied by the author, but it is enhanced with the use of AI.

Also, as a visual artist, back in the day, I used to collaborate with authors and help them make Headline pictures, but now, not only is my skill obsolete, I myself turn to AI at times because it's so damn time-saving.

Let's remember the past for a second, painters used to hate early photographers... "those images are dead" they'd say, "it's cheating", "it's 99% garbage to every 1% good photo". But look, they each found their lanes, the painters reinvented themselves, and Photography also found its place in the arts. AI - like the camera - is just a tool. The real art comes from the vision of its user.

So if anyone wants to use AI, please go ahead, but do it in a way where it's artful, sophisticated, stylized to be uniquely yours... maybe train your own model that reflects your inner creator - I don't know, but I think there is a way.


I can't believe that a talented, allegorical painter like Paul Delaroche said that famous quote "from this day forward, painting is dead". If only he was still alive (a probably 200 year old husk) today, and see how people revere his work even today, and that people still fleck oil onto a canvas with the goal of expression.

Maybe we don't have neo-classical painters like him producing enormous, detailed canvas, but there will always be a place.

I think LLMs (given how it is structured and the vast amount of compute required), will certainly kill the search engine - but then what happens to the underlying websites that are being searched for, indexed, and "lovingly" (though the Internet has not been a place of love for quite some time with rubbish like SEO ruining writing) - created sites.

I really do miss the "old" Internet, geocities, people's janky, broken HTML with image maps, and all of their passion on show. Now, it is just a click farm for advertising and outrage.

Hive, I feel is one of the last lines of defence against that, or at least, its tiny International stadium sized user base is resistant to the bullshit.

LOL indeed someone should make a parallel internet, free of AI and SEO, where users can go back to those pre-search-engine algorithms. and also bring back myspace and 9gag to complete the internet of that era.

It doesn't need to be parallel. That implies the lines on it mirror those of the Internet. I'd rather it be a piece of clear film, placed over the top of the Internet, a shimmery glow of humanity in an otherwise robotic, industrialised zone.

I never used myspace and 9gag. I used geocities, and if you're interested in this notion, there's a fucking fantastic YouTuber - Sarah Davis Baker - who has produced some amazing brain food about this very topic.

Please watch it, and be intellectually stimulated and amazed. I wish people like this Sarah would write essays for HIVE. I'd happily devour them!

Watched, really insightful... although how old is she to have been using Geocities? she looks in her 30's at most.

Myspace was all about creating a 'persona' too - though it had some set structure - you could customize almost everything, and throw in gifs, music to play in the background of one's profile, and people also had funky names. but eventually everything got bought and sterilized - its how you get Facebook - no character at all.

Sorry I confused 9gag with "Something Awful" - remember that one?

Something like LiveJournal, then. You see, in the MySpace era, I was busying myself talking on MSN Messenger, playing computer RPGs, making digital art, reading, and enjoying obtaining a broad education while having delusions of fitness that never arrived.

As to her age, I'm not sure it mattered. I started using a computer and the Internet when I was six, and had my first one at home when I was 10 or 12.

Yeah I've done this and some people blank you, deny whole heartedly (no worries, I always say look I could be wrong and I'm not having a go but....) and in one case, absolutely gorgeous nuts at me and down voted me for a week in retaliation.

But it's always best to say 'look, I can tell I think, is this where you wanna go?'. Most people check their own integrity. It's okay to deny in embarrassment and then not do it again imo.

Interesting the end result was 'fuck who knows for sure?'.

Or maybe I'm just not good enough at using LLMs.

What a typo, or Freudian slip, go back to your peanut butter, you human!

There's a lot of obvious AI slop in new. That is where I like to torment myself in search of new authors to read, I'll let you know when I find interesting, fleshy sacks of emotion and content.

Ditto.

Goes! Goes nuts!!

But I like gorgeous nuts.

Also.

Content.

I am at the gym, listening to the next book (as opposed to reading) in Mr Vandermeers Southern Reach. It is great, so far.

Chances are I walk home in the rain, too. Good start to a Monday.

I will likely read that today. Synchronized reading. I twinged my back. Can't move. Maybe too much peanut butter

The AIs/LLMs are getting better so it's getting harder for people in general to tell.

Accusations of using AI or "compliments" about "being as good as" AI (pro-tip for people that think they're giving a compliment when you say this, a number of artists would see it as a grave insult) seem to be a bit more common now as a result (it's not a new problem for digital artists, we've been getting "the computer is doing it all for you" since that became a thing XD), because someone happens to be actually good at what they do.

I had a sudden conspiracy theory that the LLMs are doing deliberately bad code so that you have trouble detecting LLM generated stuff which was much more entertaining than the more logical LLMs are also not brilliant at coding and have the same problems as everyone else of being able to tell what they generated XD

"It was beautiful code, but none of it worked" :)

That's the typical output I get when I try to get AI to code stuff. Had it even confuse the basic operators

|| (Or)

&& (And)

Maddeningly, when I told the AI it was wrong (it had used Or, instead of And) - it denied being wrong :D I don't know why I told it, not like it would change its mind (which it doesn't have)!

Denied being wrong when it was wrong? Definitely learning from humans XD


My problem with things like GPT-Zero and its like is that if the writing has any modicum of decent use of language or grammar, it's AI. Because people are dumb. Ninety percent of college-level papers that can be proven to be human-written get flagged as AI-written.

I have not tested my master's thesis in gpt-zero, but some of my other writing often gets "20% AI" scores when I check it.

I wonder if there are actual linguists working on the detection models. I doubt it, but I cbf doing that research, because my .. wonder gets me distracted from whatever it is I am doing at the time. :D

Look! Squirrel!